The Challenge

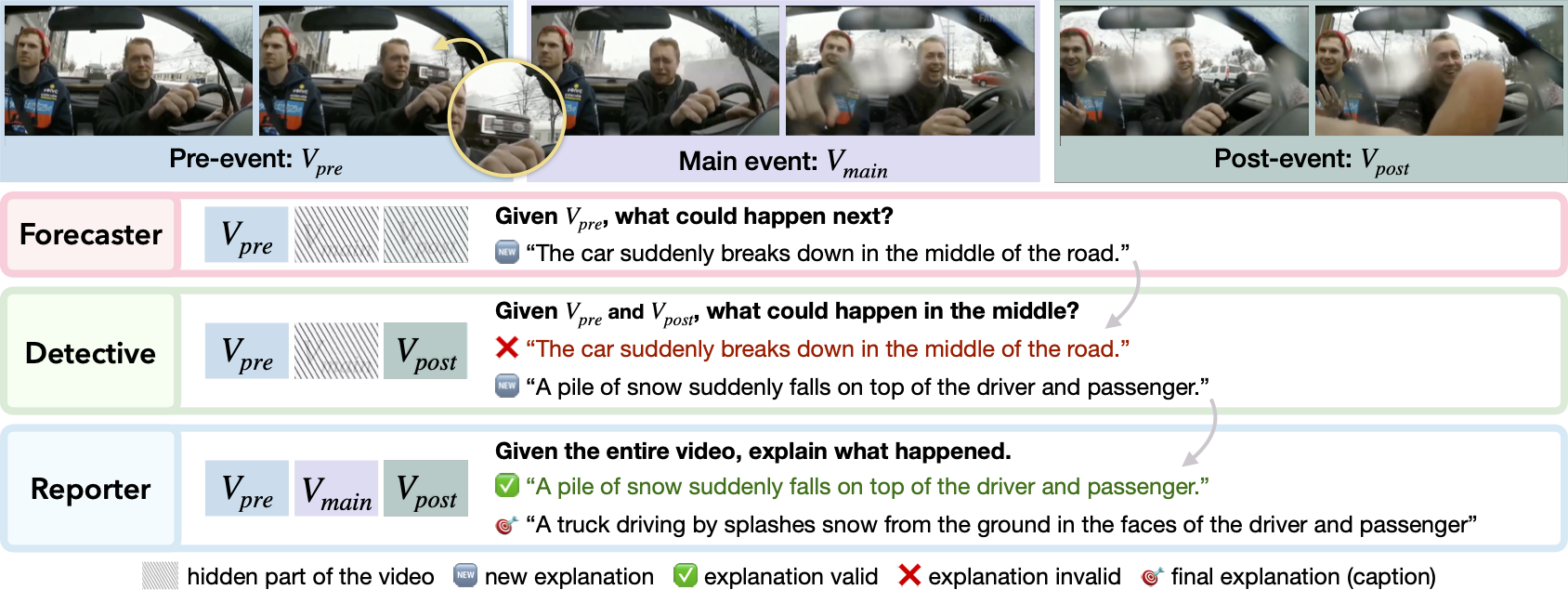

We introduce the BlackSwan Challenge, built upon the recently developed BlackSwanSuite benchmark presented at CVPR 2025. The benchmark evaluates how well vision-language models (VLMs) can reason about unexpected events in videos through a range of tasks, including multiple-choice questions (MCQ). The challenge targets two distinct reasoning skills:

Abductive Reasoning

Tasks probe models' ability to infer hidden or missing causes behind surprising events when only partial visual information is provided.

Defeasible Reasoning

Tasks assess whether models can revise their earlier conclusions when presented with new evidence that contradicts their initial assumptions.

The dataset comprises over 3,800 MCQ tasks across 1,655 videos, each containing atypical, counterintuitive, or unexpected visual situations. These scenarios are deliberately constructed to minimize the usefulness of memorized patterns, forcing models to reason rather than recall. BlackSwan remains challenging for VLMs, which trail human performance by up to 32% on these tasks.

Your goal is to beat existing video understanding models on the BlackSwan MCQ tasks for the Detective and Reporter variants of the benchmark. Submissions are evaluated on MCQ tasks only. Email your predictions to the organizers at cogvl2026@googlegroups.com to receive your scores. The best-performing teams will be invited to present their methods at the workshop. See the Submission Details page for full instructions.